We have had search engines for almost as long as we have had the Internet. From the Ask Jeeves of the 1990s to the Google of today, billions of people around the world have turned to search engines with their questions and received useful answers in return. Indeed, ‘seek and you shall find’ is a saying that is becoming ever more relevant.

Because we rely on search engines for much of the research and decision-making in our lives, site owners hoping to make an impact have long coveted the rare distinction of being listed on the first page of search engine results. The demand is so strong that an entire industry, search engine optimization (SEO), has developed around delivering the best possible search rankings.

How Google Works

Before we can effectively optimize a webpage for search engines, we need to understand how the search engine ranking process works. Most site owners have a rough idea of web page optimization: writing meta tags, adding new web pages to an XML sitemap, and submitting that sitemap to search engines. If, like many people at the moment, you use a content management system such as WordPress, then an installed SEO plugin will typically do that job for you. However, a lot goes on between the time a page is published on the Internet and the time it is found and indexed by search engines.
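
For readers who have never looked inside one, here is a minimal sketch of what an XML sitemap contains, built with Python's standard library. The URLs and dates are placeholders on a hypothetical domain, and `build_sitemap` is an illustrative helper, not part of any SEO plugin; the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML as a string for (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages on a placeholder domain.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2021-08-01"),
    ("https://example.com/blog/how-google-works", "2021-08-15"),
])
print(sitemap_xml)
```

In practice the resulting file is saved as `sitemap.xml` at the site root, which is roughly what a CMS plugin automates each time a page is published.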

Google is the most used search engine in the world, boasting 91.86 percent of the global market share. Even Baidu, favored by the massive Chinese populace, can only account for a meagre 1.48 percent. Thus, a thorough awareness of the way Google works is integral to making the adjustments that will help your webpage stand out. Generally speaking, Google has three main stages of processing that lie behind its primary search engine functions. Let us go through them briefly.

Stage 1: Crawling

The first stage in the search engine process is the crawl. This is where the search engine robots travel across the Internet on a web of links and sitemaps to discover new web pages. In the early years of search engines, these robots were often called spiders, hence the descriptive imagery of ‘crawling’. These days, in true Google form, the robots are referred to collectively as Googlebot. This software continues to trawl the web ad infinitum, collecting data wherever it goes.

When Googlebot comes across a page, it looks for specific information such as robots.txt instructions, script links, and mobile usability. It strips out the extraneous data and streamlines the source code of the page, then hands the ‘cleaned’ version over to Caffeine, Google’s indexing system.
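
To make the robots.txt step concrete, the sketch below shows how a well-behaved crawler could decide whether it is allowed to fetch a URL. It uses Python's standard `urllib.robotparser` and is not Google's own code; the robots.txt content, the domain, and the `may_crawl` helper are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a placeholder site.
robots_txt = """\
User-agent: *
Disallow: /admin/
Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())  # parse in memory, no network request


def may_crawl(url, user_agent="Googlebot"):
    """Return True if the robots.txt rules allow this user agent to fetch url."""
    return parser.can_fetch(user_agent, url)


print(may_crawl("https://example.com/blog/post"))       # allowed path
print(may_crawl("https://example.com/admin/settings"))  # disallowed path
print(parser.site_maps())  # sitemap URLs declared in robots.txt (Python 3.8+)
```

A page blocked this way is never fetched, so a stray `Disallow` rule is one of the first things worth checking when a page fails to appear in search results.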

Stage 2: Indexing

As with Googlebot, Caffeine has a specific job. The platform renders each page in its own browser, validating the HTML, working out what the page’s JavaScript does, and identifying the overall document structure. During this process, pages perceived as having no value, such as error pages or bad redirects, are thrown out.

Space is at a premium on servers and there is no room for broken pages. Next, the structured content on the page is extracted and analyzed to see what it is about. If the page is deemed to contain useful content, it is added to the Google search index. Language and geographic location information are recorded, Google SafeSearch checks whether the content is adult content, Google Safe Browsing checks whether the page poses computer security risks, and a large number of indicators are logged in preparation for the ranking stage.
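
The general idea of an index can be illustrated with a toy inverted index, which maps each word to the pages that contain it. This is a deliberately simplified sketch and says nothing about how Caffeine is actually implemented; the page records, the status-code filter, and the `build_index` helper are all assumptions made for the example.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for page in pages:
        # Skip pages that did not load successfully (errors, bad redirects).
        if page["status"] != 200:
            continue
        for word in re.findall(r"[a-z0-9]+", page["text"].lower()):
            index[word].add(page["url"])
    return index


# Hypothetical crawl results.
crawled_pages = [
    {"url": "https://example.com/seo", "status": 200,
     "text": "Quality backlinks improve search rankings"},
    {"url": "https://example.com/links", "status": 200,
     "text": "Building backlinks from authoritative domains"},
    {"url": "https://example.com/old", "status": 404,
     "text": "Page not found"},
]

index = build_index(crawled_pages)
print(sorted(index["backlinks"]))
```

Looking up a word then becomes a dictionary access instead of a scan of every page, which is why a page has to be in the index before it can appear in any result.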

Stage 3: Ranking

Now, just because a page has been included in the Google index does not mean that it will automatically rank well. Being indexed means that a page is eligible to be displayed in search engine result pages (SERPs), but it does not guarantee where in the search results the page shows up. Consider this: a search for ‘rank first on Google’ brings up 629 million results.

629 million results on the Google search for ‘rank first on Google’.

While your target search keywords may not be as popular, being listed on page 1 is very different from being listed on page 200. Hundreds of data points are analyzed in Google’s system to decide page ranking, and nobody outside Google knows exactly how they are all weighed. However, we do know some of the most influential factors. One of these is the presence of quality inbound links to your page.

Quality over Quantity

Imagine that you are attending a business networking event as a newcomer on the scene. You could go around the room handing out business cards to various potential clients or you could have a respected and reputable associate personally introduce you to them instead. Everyone in business knows that introductions are important and this principle translates to how your pages are perceived by Google as well.

Going further with this concept, the more third-party pages you have linking to your webpage the better, right? Wrong. Let us go back to the fictional networking event and replace your honorable associate with a carful of clowns. You would be attention-grabbing, perhaps, but the impression you leave would be dubious at best.

Domain Authority

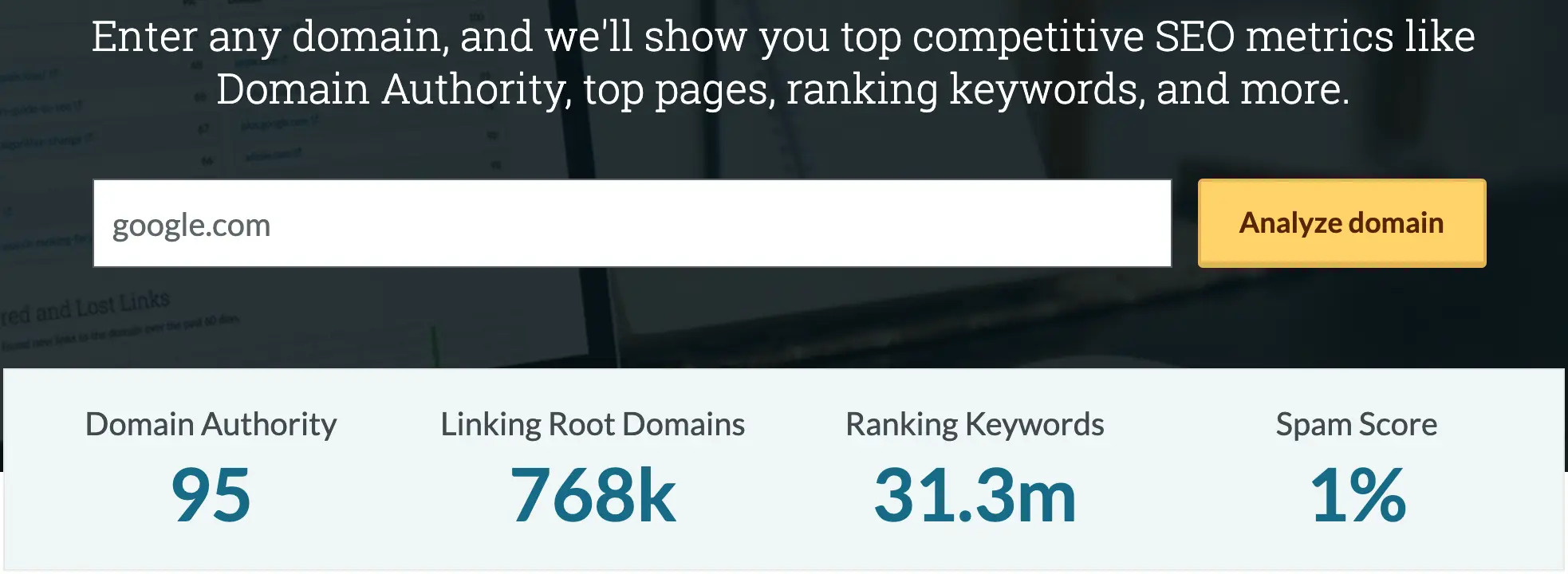

To judge the quality of inbound links, SEO specialists use a metric called domain authority (DA), developed by the SEO software company Moz. DA is a third-party score that estimates how well a website is likely to rank in SERPs; it is not a figure that Google itself publishes. It is calculated by evaluating several factors including the total number of links on the domain and how often the domain has been displayed by Google in its search results. DA scores range from 1 to 100 on a scale that compares all domains.

Therefore, fresh websites start with a DA of one and work up to a better score as they accumulate more authoritative backlinks with time. In general, established sites with millions of verified external links such as wikipedia.org or amazon.com receive the highest DA scores, while small sites with few incoming links have low DA scores.

The DA score of Google.com.

With this in mind, businesses must consider the DA of the sites linking to them when investing in any link building efforts. Link building is the process of creating backlinks to a website to improve SERP rankings. Remember the early days of SEO, when website owners published lines upon lines of keyword spam in ham-fisted attempts to manipulate SERP rankings? A similar trend is currently occurring with backlinks, where people buy hundreds of shoddy backlinks to their web pages in an attempt to improve their rankings.

Unfortunately for them, Google views a large volume of low-quality backlinks with the same suspicion as a carful of clowns. Hence, building backlinks from authoritative domains is the best way to foster the reputation of your website and increase your rank in the all-seeing eyes of Google.

Content is Key

Just as crafty site owners are learning tricks and tips to boost their SERP rankings, search engine intelligence is evolving to recognize efforts to mislead the system. In 2015, Google published a paper where researchers proposed the innovative approach of ranking pages based on the accuracy of the information presented on the page. While this idea is not yet a reality, many of the updates made by Google in recent years continue to focus on the goal of delivering the most relevant and useful content for Internet users.

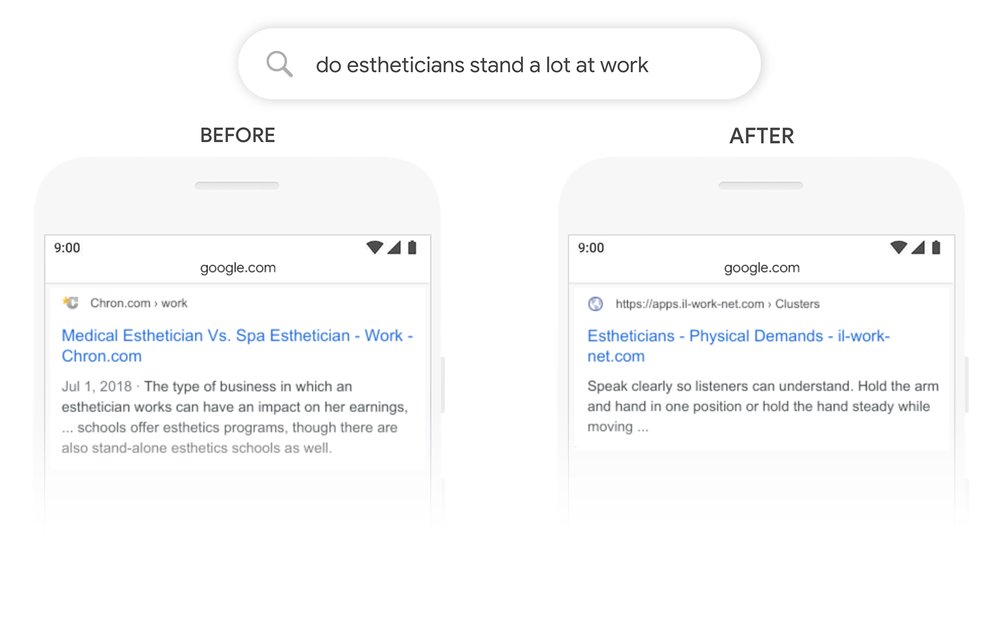

Part of this plan is BERT, the language model Google introduced into its search algorithm in 2019. BERT, or Bidirectional Encoder Representations from Transformers, signaled a new era in search engine technology in which Google shifted away from matching individual keywords and towards understanding natural language in context.

What the launch of BERT means for site owners is that it is no longer acceptable to publish pages crammed with random content and repeated keyword mentions. Useful, engaging, original content and organically incorporated keywords are the order of the day as Google seeks out the specific content its users are looking for.

BERT helps Google to parse natural language and deliver better results.

In addition, Google rolled out its link spam update in July 2021 to reduce the impact of link spam on SERPs. Under this update, there are now specific requirements when site owners publish links that are commercial in nature, such as affiliate links and sponsored posts. Certain practices, such as keyword or link stuffing, publishing the same article on various sites, and posting articles with poor factual knowledge or research, also constitute a violation of Google’s webmaster guidelines.
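
In practical terms, Google's guidance is to qualify commercial links with a `rel` attribute, normally `rel="sponsored"`, with `rel="nofollow"` also accepted. The sketch below, written with Python's standard library, scans a fragment of HTML for commercial links that lack either value. The sample HTML, the list of commercial hosts, and the `unqualified_links` helper are hypothetical; a real audit would need its own way of deciding which links are commercial.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

ACCEPTED_REL_VALUES = {"sponsored", "nofollow"}


class LinkCollector(HTMLParser):
    """Collect (href, rel values) for every <a> tag with an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:
            return
        rel_values = (attributes.get("rel") or "").lower().split()
        self.links.append((href, set(rel_values)))


def unqualified_links(html, commercial_hosts):
    """Return commercial links that carry neither sponsored nor nofollow."""
    collector = LinkCollector()
    collector.feed(html)
    return [
        href for href, rel_values in collector.links
        if urlparse(href).netloc in commercial_hosts
        and not (rel_values & ACCEPTED_REL_VALUES)
    ]


# Hypothetical article fragment and hypothetical commercial partners.
sample_html = """
<p>Read our <a href="https://partner.example/deal" rel="sponsored">partner offer</a>,
this <a href="https://shop.example/item?ref=123">affiliate pick</a>,
and the <a href="https://en.wikipedia.org/wiki/Web_crawler">background article</a>.</p>
"""

flagged = unqualified_links(sample_html, {"partner.example", "shop.example"})
print(flagged)
```

Here only the affiliate link is flagged: the partner link is already marked as sponsored, and the editorial link to the background article needs no qualifier.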

So does this mean that sponsored posts and guest posts are a thing of the past? Not necessarily. However, it does mean that articles need to offer some educational or informative value to users and not exist simply for the purpose of creating keyword or backlink spam.

Through what we know about the reinforced content-and-user-first principles of Google, the conclusion for site owners who want to achieve superior SERP rankings is to invest in well-written, distinctive, and original content that is geared towards enhancing the user experience. In any case, this gold standard of web content creation is often a prerequisite for publication on any high-DA sites worth their salt. In Google’s eyes, valuable content is the only logical reason why an authoritative site would link to an external page, so why not make it believable?

Google is geared to rank web pages by relevance and usefulness. While link building remains very important for SEO, the quality of links and the content that surrounds them are essential areas of emphasis. By producing professional content that is helpful to users, site owners can gain a better reputation with their clientele and earn backlinks from sought-after high-DA sites. At the same time, they build a mutually beneficial relationship with Google that is based on substance instead of spam, a relationship that will ultimately help site owners to rank higher in search engine result pages.

If you need help improving the Google ranking of your business, reach out to our team.